Global Warming Myths

Note: this discussion of myths and misconceptions is not "balanced", comprehensive, or scientifically rigorous, but it is accurate to the best of my knowledge and does not knowingly exclude any assertions, pro or con, on the topics discussed. Myths are not necessarily falsehoods, although there are plenty of falsehoods made and propagated by nonscientists on both sides. I also consider as myths those assertions by scientists that are not supported by the evidence but are presented in a way that gives nonscientists the false impression that the evidence for the conclusion actually exists. The classic examples are the "hockey stick" graphs of temperature and greenhouse gases, which are intended to convey the impression of historic natural stability by juxtaposing older, naturally or statistically smoothed proxy measurements with modern instrument measurements or higher-resolution modern proxies that are able to show the current spikes.

Myth: Neo-Gaianism

The myth that the earth will regulate itself towards an optimum for life no matter what humans do to the atmosphere. The term Gaia refers to the biosphere regulating itself, but the neo-Gaiaists apply the concept to geophysical processes as well. It is the opposite of the Tipping Point myth.

There is no doubt that man's effect on the environment can overwhelm the ability of any local natural process to compensate. One simple example: bulldoze a few acres of virgin ecosystem anywhere in the world and then keep accessing it by vehicle or even on foot. In most cases exotic (non-native) weeds will take over and greatly slow or prevent natural restoration. If the topsoil washes away, natural restoration may be impossible forever.

The preceding outcome can be quite different once human intervention is recognized and humans take steps to mitigate their actions. Restoring native habitat is very doable once the ecological processes are understood. Extended globally, the same principles apply, except that gases mix and spread across the globe quickly, which deceptively appears to mitigate their effect. But the global effects may or may not be mitigated naturally, and may end up overwhelming the ability of global natural processes to compensate. As with local effects, the needed restoration actions will inevitably come to be well understood through global models. If it becomes necessary, models will reveal the most cost-effective cooling solutions. Regardless of which cooling methods are needed or used, or whether governments are involved, the cooling, if needed, is not going to be provided by nature.

In the global warming debate the neo-Gaiaists conveniently adapt Gaiaism to exclude their own actions and similar ones by other humans, since true adherence to Gaia would require diligent consideration of all ecological effects before undertaking any action. There is no longer any doubt that the actions of mankind have an effect on the atmosphere, although the extent and the climate consequences are still in doubt. The measured increases in CO2 can partly be explained as a response to warming or other natural effects, but there is ample evidence that human releases are part of the rise. The article Why does atmospheric CO2 rise? points out the lag between rises in the northern and southern hemispheres, the decline in the 13C/12C ratio, the decline in 14C, etc. Taken together, this evidence points to the rise in CO2 being from fossil fuels. Although the amount of the CO2 rise coming from fossil fuels is in question (see Myth: All "extra" CO2 is human), the manmade component is certainly not negligible, and neither is the consequent rise in temperature.

Myth: Tipping Point

The hypothesis that the earth has reached or will soon reach a point where greenhouse gases will cause predominantly positive feedbacks, for example warmth causing increases in greenhouse gases, which cause more warmth. It is an offshoot of Gaianism and the opposite of Neo-Gaianism. The creator of the Gaia theory, James Lovelock, postulates a tipping point in his book The Revenge of Gaia. Noted scientist Jim Hansen describes a tipping point scenario in a New York Review of Books article, The Threat to the Planet:

"...But if CO2 emissions are not limited and further warming reaches three or four degrees Fahrenheit, all bets are off. Indeed, there is evidence that greater warming could release substantial amounts of methane in the Arctic. Much of the ten-degree Fahrenheit global warming that caused mass extinctions, such as the one at the Paleocene-Eocene boundary, appears to have been caused by release of "frozen methane." Those releases of methane may have taken place over centuries or millennia, but release of even a significant fraction of the methane during this century could accelerate global warming, preventing achievement of the alternative scenario and possibly causing ice sheet disintegration and further long-term methane release that are out of our control."

As can be seen in the RealClimate discussion Runaway tipping points of no return, the science of climate tipping points is very loose. It consists mostly of cherry-picking and hypothesizing positive feedbacks while ignoring and refusing to hypothesize negative ones. For example, the answer given to the question of negative feedbacks (comment #142 in that discussion) was that positive feedbacks are overwhelming the negative ones (i.e. it's OK to ignore negative feedback). But since actual CO2 forcing can account for more than the observed warming even without modeled water vapor feedback, it appears that some negative feedbacks are already occurring (or that there is a lot of negative forcing). The IPCC 2007 report states that no large nonlinear feedbacks have yet taken place for carbon, since the carbon cycle flow percentages have remained relatively unchanged since 1958. That doesn't address other potential warming feedback loops such as methane, but short-term feedback is more likely to be negative (long-term feedback is more likely to be positive), and short-term effects are more easily countered than long-term ones. For example, it is much easier and cheaper to get a little short-term cooling (if that becomes necessary) than to lower long-term warming (which will probably never become necessary).
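The disagreement above turns on how positive and negative feedbacks combine. A common textbook simplification treats feedbacks as factors that add linearly: equilibrium warming is the no-feedback warming divided by (1 - f), where f is the net feedback factor, and f of 1 or more means the linear model has no stable equilibrium (a runaway). The sketch below assumes that linear form; the function name and all numbers are hypothetical, chosen only to show how a negative feedback offsets a positive one.

```python
def equilibrium_warming(no_feedback_warming, feedback_factors):
    """Equilibrium warming under a simple linear feedback model (assumption).

    feedback_factors: positive values amplify, negative values damp.
    Returns None when the net factor is >= 1, i.e. this simplified model
    has no finite equilibrium (a 'tipping point' in its terms).
    """
    f = sum(feedback_factors)
    if f >= 1.0:
        return None
    return no_feedback_warming / (1.0 - f)


dt0 = 1.2  # K, hypothetical no-feedback response
print(equilibrium_warming(dt0, []))            # no feedbacks
print(equilibrium_warming(dt0, [0.5]))         # one positive feedback
print(equilibrium_warming(dt0, [0.5, -0.2]))   # partly offset by a negative one
print(equilibrium_warming(dt0, [0.7, 0.4]))    # net >= 1: no equilibrium
```

Real feedbacks are neither constant nor strictly additive, which is where the modeling disputes described above come in.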

The main problem with climate tipping point science is that water vapor, by far the largest and fastest feedback, is not well modeled. The reason is that water vapor is unevenly distributed throughout the atmosphere by the weather, and to determine the warming effect of that water vapor (and the cooling effect of some types of clouds) we must model the weather in detail (although forecasting is not necessary; see Climate Forecasting is not Weather Forecasting). The full extent of evaporation, condensation and precipitation must also be modeled, since all of them provide large amounts of warming and cooling at various levels of the atmosphere. Water vapor as a greenhouse gas is unlikely to provide a tipping point, since as its concentration gets higher its distribution becomes more uneven, which is a negative feedback. But it is also possible that water vapor could become more evenly distributed, especially at higher altitudes, which would provide positive feedback. We won't know for sure until the resolution of climate models is improved.
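Why unevenness acts as a negative feedback follows from the shape of the response. If the greenhouse effect grows roughly with the logarithm of concentration (an assumption made here, not something the text specifies), then by Jensen's inequality the same average amount of vapor spread unevenly produces less effect than when spread evenly. The function names, units and humidity values below are hypothetical, and the sketch ignores altitude and temperature, which matter a great deal in reality.

```python
import math


def greenhouse_effect(q):
    """Hypothetical effect of humidity q (arbitrary units), assumed to grow
    with log(q). Only differences between cases are meaningful."""
    return math.log(q)


def average_effect(columns):
    """Mean effect over equally weighted air columns with the given humidities."""
    return sum(greenhouse_effect(q) for q in columns) / len(columns)


even = [2.0, 2.0, 2.0, 2.0]           # mean humidity 2.0
uneven = [0.5, 1.5, 2.5, 3.5]         # same mean, more spread
very_uneven = [0.25, 0.75, 3.0, 4.0]  # same mean, even more spread

for name, cols in (("even", even), ("uneven", uneven), ("very uneven", very_uneven)):
    print(f"{name:>11}: mean humidity {sum(cols) / len(cols):.2f}, "
          f"average effect {average_effect(cols):.3f}")
```

With any concave response the ordering is the same; how strong the effect is in the real atmosphere is exactly what requires the detailed weather modeling described above.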

Myth: If they can't predict the weather...

Longer-term weather forecasting is very difficult because weather systems are deterministically chaotic: the weather is sensitive to initial conditions (i.e. the butterfly effect). It is possible to predict near-term weather because small-scale effects take time to propagate into large-scale changes. See Numerical Weather Prediction in FAQ and Chaos and Climate.

Climate forecasting, on the other hand, does not depend on weather forecasting in order to make predictions. Weather patterns matter to climate and must be modeled in some way that isn't oversimplified (see Oversimplified models), but the weather models inside the climate models do not have to accurately predict weather for any time or location. They need only depict weather with enough overall fidelity to calculate its impact on climate. As a very simple example, it doesn't matter whether a weather model says it is 20 degrees at my house and 40 at yours, 40 at mine and 20 at yours, or 30 at both, if they are all averaged into a single climate statistic. Likewise it doesn't matter at all whether the rain comes on Tuesday or on Thursday if what matters is the rainfall for the week.
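The weather/climate distinction can be illustrated with the Lorenz (1963) equations, a three-variable textbook chaotic system that is a toy, not a weather model. Two runs whose starting points differ by one part in 10^8 soon diverge completely (the "weather"), yet their long-run averages agree closely (the "climate"). The parameter values are the standard ones from Lorenz's paper; the step size, run length and function names are choices made for this sketch.

```python
def lorenz_rhs(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Time derivatives of the Lorenz (1963) system."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """One classical fourth-order Runge-Kutta step."""
    def shifted(base, slope, h):
        return tuple(b + h * s for b, s in zip(base, slope))
    k1 = lorenz_rhs(state)
    k2 = lorenz_rhs(shifted(state, k1, dt / 2))
    k3 = lorenz_rhs(shifted(state, k2, dt / 2))
    k4 = lorenz_rhs(shifted(state, k3, dt))
    return tuple(s + dt / 6.0 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def compare_runs(start_a, start_b, steps=200_000, dt=0.01, spinup=1_000):
    """Integrate two runs side by side. Returns the largest distance between
    them and each run's mean z after a spin-up period."""
    a, b = start_a, start_b
    max_sep = 0.0
    sum_za = sum_zb = 0.0
    for i in range(steps):
        a = rk4_step(a, dt)
        b = rk4_step(b, dt)
        sep = sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5
        max_sep = max(max_sep, sep)
        if i >= spinup:
            sum_za += a[2]
            sum_zb += b[2]
    n = steps - spinup
    return max_sep, sum_za / n, sum_zb / n


max_sep, mean_z_a, mean_z_b = compare_runs((1.0, 1.0, 1.0), (1.0 + 1e-8, 1.0, 1.0))
print(f"largest separation between the two runs: {max_sep:.1f}")
print(f"long-run mean of z: {mean_z_a:.2f} vs {mean_z_b:.2f}")
```

Pure Python keeps the sketch dependency-free; it takes a few seconds to run.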

Myth: If it's this cold/warm GW must be false/true

Everyone agrees that natural variation is part of the weather and climate, yet almost everyone seems to make this simple mistake over and over: warm or unusual weather is taken as proof of global warming, or cold weather as proof that human-caused global warming, or global warming itself, doesn't really exist. Mostly the comment is made tongue-in-cheek, but often it is made as if it proves something.

One example of many: Lubos Motl implied that the record cold in November 2006 was due to billions of years' worth of natural variability (Florida, Yukon: record cold temperatures). There's little doubt the climate is getting warmer, and indeed that may be just natural variability, but the record cold doesn't prove that natural variability is the only factor involved.

In another recent example, a poster at RealClimate noted the anomalous weather around Hudson Bay in February 2006 and claimed model predictive accuracy: "Thundershowers in February over South Central Baffin Island above the Arctic circle (approx. 65 degrees North), totally unheard of". But the records for a Baffin Island station at 66 degrees north (CAPE DYER A) show that, although there are no February days with rain, there was one in January 1977 (the record starts in 1959). Like 2006, 1977 was the start of an El Nino, and it would be interesting to know whether that was part of the model that was claimed to have predicted this event.

Myth: Pinatubo released more "greenhouse gases" than...

Typically finished with "mankind since the industrial revolution". It is an attempt to conflate the water vapor from the volcano with long-lasting gases from mankind. It is nonetheless completely false, since the water vapor emitted by Pinatubo (491 Mt) was far less than the 20,000 Mt of water vapor emitted by fossil fuel burning each year (http://www.hyweb.de/Knowledge/Vapour.htm), and both are dwarfed by the roughly 505,000,000 Mt (about 505,000 km3) of water moved each year by the natural water cycle. In short, water vapor releases by man, volcanoes, or anything other than evaporation and transpiration are trivial.

There are more specific and easily disproven claims about CO2 and other gases, e.g. Slicing with Occam's Razor: "...one Mt. Pinatubo sized volcano puts out more CO2 in a day of belching than mankind in a year...". But based on analysis of the pre-eruption magma vapor, emissions from the 1991 Pinatubo eruption were 42 Mt of CO2, and the worldwide annual totals from http://www.eia.doe.gov/oiaf/1605/gg97rpt/chap1.html are 150,000 Mt natural and 7100 Mt manmade.
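The magnitudes in the two paragraphs above can be checked in a few lines. The figures are taken as given in the text; the km3-to-Mt conversion assumes liquid water at 1000 kg/m3, and the CO2 comparison treats the 42 Mt and the annual totals as being in the same units, as the text does.

```python
# Water vapor: quantities from the text.
M3_PER_KM3 = 1e9
WATER_KG_PER_M3 = 1000.0   # assumed density of liquid water
KG_PER_MT = 1e9            # 1 Mt = 1e9 kg

pinatubo_h2o_mt = 491.0
fossil_h2o_mt_per_year = 20_000.0
natural_km3_per_year = 505_000.0
natural_mt_per_year = natural_km3_per_year * M3_PER_KM3 * WATER_KG_PER_M3 / KG_PER_MT

fossil_share = fossil_h2o_mt_per_year / natural_mt_per_year
pinatubo_share = pinatubo_h2o_mt / natural_mt_per_year
print(f"natural water cycle: {natural_mt_per_year:,.0f} Mt per year")
print(f"fossil fuel water vapor: {fossil_share:.4%} of the natural cycle")
print(f"Pinatubo water vapor: {pinatubo_share:.6%} of the natural cycle")

# CO2: Pinatubo's whole eruption versus the quoted annual manmade total.
pinatubo_co2_mt = 42.0
manmade_mt_per_year = 7_100.0
days_equivalent = pinatubo_co2_mt / (manmade_mt_per_year / 365)
print(f"Pinatubo's eruption ~ {days_equivalent:.1f} days of manmade emissions")
# If the annual totals are in tonnes of carbon rather than CO2, multiply them
# by 44/12 (about 3.67) first; the gap with Pinatubo would then be wider.
```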

Myth: Current global warming can be explained by...

As pointed out in the summary Changes in Solar Brightness Too Weak to Explain Global Warming, the solar irradiance changes it discusses are too small to have caused the recent short-term warming (since the 1970s), or the longer-term warming over the last 400 years, as far as proxies for temperature and solar activity can be gleaned from the record. As the article states, there may be other changes in the sun that cause warming indirectly (see On the Role of Cosmic Ray Flux variations as a Climate Driver: The Debate), but those larger changes are heavily cyclical (22 years), and that cycle is not reflected in real-world climate or weather.

Indirect influence by the sun, such as altering the cosmic ray flux, also cannot explain Global Warming on Mars or Global warming on Jupiter (the article also has links to warming on other planets and moons), since the cosmic ray cloud formation processes are unique to earth. Those extraterrestrial solar warmings are relevant in only two ways: showing generically that planets can warm (which is an obvious fact), or showing that increased luminosity from the sun is causing part of the warming on earth and other bodies in the solar system. But the luminosity changes are small, and so is their contribution to warming. Since the temperature of a body (without any other feedback effects) is proportional to the fourth root of the radiation flux (see below), and the solar variation over 2000 years has been about 0.1%, the corresponding temperature change would be only about 0.025%, or roughly 0.07 C, over 2000 years.

The "back of an envelope calculation" in http://www.junkscience.com/Greenhouse/cause.html equates a 0.2% increase in irradiation with a 0.2% increase in earth's temperature. This is incorrect. As explained in Stefan-Boltzmann law, the energy radiated from a black body is proportional to the 4th power of the temperature of that body. The earth is in equilibrium, so the energy received from the sun equals the energy transmitted back into space. The earth's black body temperature (without albedo or greenhouse effect) can be calculated as going from

T = 4th root of ((1364 / 4) / (5.67 x 10^-8)) = 278.479 K at 1364 W/m2

to

T = 4th root of ((1367.23 / 4) / (5.67 x 10^-8)) = 278.644 K at 1367.23 W/m2,

so an increase of about 0.24% in solar radiation causes an increase of only about 0.06% in temperature, one quarter as much.
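The two temperatures above, the one-quarter rule, and the 0.1% estimate from the previous paragraph can be reproduced with the same simplified energy balance (sunlight spread over the whole sphere, no albedo, no greenhouse effect). The function name is illustrative.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant (W m^-2 K^-4), value used in the text


def blackbody_temperature(solar_constant):
    """Equilibrium temperature (K) with sunlight spread over the whole sphere
    (divide by 4), no albedo, no greenhouse effect and no feedbacks."""
    return (solar_constant / 4.0 / SIGMA) ** 0.25


t_low = blackbody_temperature(1364.0)
t_high = blackbody_temperature(1367.23)
flux_pct = (1367.23 / 1364.0 - 1) * 100
temp_pct = (t_high / t_low - 1) * 100
print(f"{t_low:.3f} K -> {t_high:.3f} K")
print(f"flux +{flux_pct:.3f}% gives temperature +{temp_pct:.3f}% "
      f"(ratio {temp_pct / flux_pct:.3f})")

# The same rule applied to a 0.1% change in solar output.
warming = blackbody_temperature(1364.0 * 1.001) - t_low
print(f"0.1% more sunlight: {warming:.3f} K warmer")
```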

Henrik Svensmark's cosmic ray flux explanation for global warming doesn't explain why there isn't a dramatic 22-year climate cycle, since the 22-year cycle (and the correlated cycle in low clouds that Svensmark demonstrates) dwarfs the long-term decline in cosmic ray flux. Performing Fourier transforms on local or global temperatures does not reveal any significant 22-year frequency component. CO2 also does not have a significant 22-year frequency component, although it has a very distinct seasonal fluctuation caused by the northern hemisphere growing season. Cosmic ray flux changes may yet provide important explanations of long-term climate changes, but it is unlikely that they have had any significant impact on 20th century climate change.
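The Fourier test mentioned above can be sketched as a simple periodogram: remove a linear trend, take a discrete Fourier transform, and look for power at a 22-year period. The series here are synthetic (a trend plus noise, with and without a hypothetical 22-year cycle), not real temperatures, and a real analysis would also need windowing and a significance test against autocorrelated noise.

```python
import math
import random


def detrend(series):
    """Subtract the least-squares straight line from an evenly spaced series."""
    n = len(series)
    t_mean = (n - 1) / 2.0
    y_mean = sum(series) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(series))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    return [y - y_mean - slope * (t - t_mean) for t, y in enumerate(series)]


def periodogram(series):
    """Map period (in samples) -> power, using a plain discrete Fourier transform."""
    x = detrend(series)
    n = len(x)
    power = {}
    for k in range(1, n // 2 + 1):
        re = sum(v * math.cos(2 * math.pi * k * t / n) for t, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * k * t / n) for t, v in enumerate(x))
        power[n / k] = (re * re + im * im) / n
    return power


random.seed(1)
years = 176  # 8 full 22-year cycles, so a 22-year period falls exactly on a bin
noise = [random.gauss(0.0, 0.05) for _ in range(years)]
without_cycle = [0.005 * t + e for t, e in enumerate(noise)]
with_cycle = [v + 0.1 * math.sin(2 * math.pi * t / 22) for t, v in enumerate(without_cycle)]

p_with = periodogram(with_cycle)
p_without = periodogram(without_cycle)
print("dominant period with cycle:", max(p_with, key=p_with.get))
print(f"power at 22 years: with {p_with[22.0]:.3f}, without {p_without[22.0]:.4f}")
```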

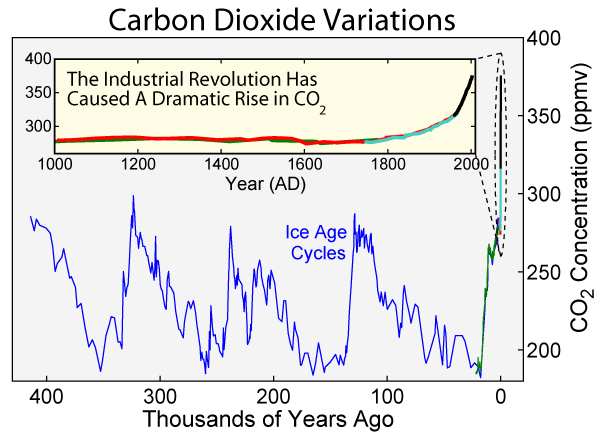

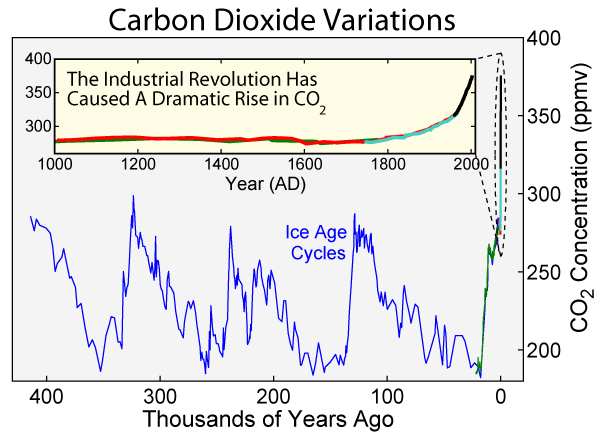

Milankovitch forcing consists of slow cyclic changes in the earth's orbit and tilt that trigger large changes in climate and CO2. They are illustrated by the cyclic changes in ice cores shown below. Although the resulting changes are substantial, shifting the climate from warm periods to ice ages, they are very slow and could not account for any short-term temperature or CO2 changes, and they currently point to long-term (thousands of years) cooling and diminishing CO2.

Myth: The warmest year in the last...

The century claim may be true, but it is not proven in the way it is claimed to be proven. To obtain the century claim, one has to downplay the urban heat island effect (UHIE). The UHIE is "officially" deemed to be negligible in Wikipedia, but it is clearly not negligible when viewing the difference between urban and rural stations. Often the difference is reduced to statistics based on population, which ignores the more significant factors of geography and development patterns relative to the station location. A lot of anecdotal evidence can be viewed at Station Data by clicking "rural stations compared to neighboring cities", but any realistic comparison would have to take into account the local geography and development patterns. Statistical studies comparing "urban" and "rural" sites under various weather conditions (e.g. The Surface Temperature Record and the Urban Heat Island) also do not take these factors into account. The extent of the UHIE can probably be shown by satellite temperature measurements, which show about half of the warming of surface measurements over the time that satellites have been used to measure global average temperature.

Part of the warming observed over the last 400 years is due to the end of the Little Ice Age 400 years ago, and 12,000 years ago was the depth of a real ice age. But despite the expected warming from those events, there is no reliable way to tell that recent years are the warmest. The reason is that prehistoric temperature measurements are obtained from proxies which contain varying amounts of natural smoothing. The physics and biology that produce the temperature proxy, and how they are reflected in the smoothing of the data, must be analyzed to determine the "uncertainties" in the measurements, rather than relying on statistical comparisons between and within data sets. To make the million-year claims, older measurements are needed, such as those from ice cores, which have less complex physics but much less resolution, so they can easily miss a thousand-year spike, much less a sub-century spike like the current one. See below for a discussion of ice cores.
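The smoothing argument can be made concrete with a hypothetical record: a flat baseline with a 50-year, +1.0 C spike, passed through centered running means of increasing width. A running mean is only a crude stand-in for how a real proxy smooths (which, as argued above, depends on its physics and biology); the record, spike size and window widths are invented for illustration.

```python
def running_mean(series, window):
    """Centered running mean over `window` samples (odd); near the ends the
    window is truncated to the available data."""
    half = window // 2
    out = []
    for i in range(len(series)):
        lo, hi = max(0, i - half), min(len(series), i + half + 1)
        out.append(sum(series[lo:hi]) / (hi - lo))
    return out


# Hypothetical 2000-year annual record: flat baseline plus a 50-year +1.0 C spike.
record = [0.0] * 2000
for year in range(1000, 1050):
    record[year] = 1.0

peaks = {w: max(running_mean(record, w)) for w in (1, 51, 201, 501)}
for w, peak in peaks.items():
    print(f"{w:>3}-year smoothing: spike appears as +{peak:.2f} C")
```

A spike shorter than the smoothing window shrinks roughly in proportion to spike length divided by window length.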

The recent measurements from most proxies show little warming; see "Global Warming" Proxies and compare them to the graph from Mann below. The "uncertainty" represented by the gray area in Mann's chart is purely statistical, based on comparing proxy to proxy, not on the physics and biology behind the measurements, which is what matters. Comparing ancient readings to modern ones within one proxy is the only valid method to analyze temperature trends. To superimpose the instrument record (red line) onto the proxy average (blue line) is to juxtapose exact instrument readings containing a spike with proxy readings that will smooth out spikes (then further smoothed by painting the blue line). It is misleading to imply that they are equivalent measurements and that there could therefore be no similar spikes in the historical record. The extent to which the spikes were smoothed is a function of the physics and biology of the proxy and cannot be determined through statistical analysis of the data, as was performed to produce Mann's figure.

The main argument for obtaining the hockey stick shape from the proxy data is that the past warming shown in various proxies is not synchronized globally. For example, the regional warming in European and North American proxies from roughly 1000-1400 AD is not seen in many southern hemisphere and remote northern hemisphere proxies. But most of those proxies don't show the current warming either. The choice of proxies is critical for Mann's graph, particularly in matching up to the instrument record. Regardless of its 20th century conclusions, the hockey stick also tends to downplay natural climate variation, particularly cooling, and thus hides natural warmings (e.g. before 1000) that are larger and faster than today's.

Myth: The most CO2 in 650,000 years

An even more blatant example of ignoring physics and publishing improperly juxtaposed measurements as fact is CO2 in ice cores compared to modern instrument measurements. A similarly unsupportable claim for methane is:

"The historic record of atmospheric CH4 obtained from ice cores has been extended to 420,000 years before present (BP) (Petit et al., 1999). As Figure 4.1e demonstrates, at no time during this record have atmospheric CH4 mixing ratios approached today's values" (http://www.grida.no/climate/ipcc_tar/wg1/135.htm)

The ice core used for the methane and CO2 claims is Vostok (see Vostok Ice Core CO2 Data), which contains very sparse measurements of highly smoothed data. The instruments measuring the CO2 are not in question; the problem lies in the minimum thickness of a slice and how much time that slice represents. The CO2 measurement made from that slice is then a distribution, perhaps roughly Gaussian, of the atmospheric CO2 present over that period of time and more, since the CO2 diffuses through the ice. An example from another ice core: Proposed Drill Site... implies (above its figure 7) that hundreds of years are compressed into 1 cm of ice. [Note, still looking for a reference that would indicate the resulting resolution.] The Vostok data show the readings to be between 1000 and 4000 years apart.
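The sparse-sampling point can be sketched by treating each ice core sample as an average of the air over a fixed window, with samples taken at fixed spacing. The 1000-year averaging window is an assumption (the text notes that the actual resolution still needs a reference), the 2000-year spacing is picked from within the 1000-4000 year range quoted for Vostok, a boxcar average stands in for the roughly Gaussian distribution described above, CO2 diffusion is ignored, and the excursion itself is hypothetical.

```python
def overlap(a_start, a_end, b_start, b_end):
    """Length of the overlap between two time intervals (0 if disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def largest_sample(spike_start, spike_years, spike_ppm, window, spacing, record_years):
    """Largest CO2 excess a sampled record would report for a rectangular
    excursion, if each sample averages `window` years of air (boxcar
    assumption) and samples are `spacing` years apart. All hypothetical."""
    best = 0.0
    sample_time = 0.0
    while sample_time <= record_years:
        covered = overlap(spike_start, spike_start + spike_years,
                          sample_time - window / 2, sample_time + window / 2)
        best = max(best, spike_ppm * covered / window)
        sample_time += spacing
    return best


# A hypothetical +100 ppm excursion lasting 200 years, in a record sampled
# every 2000 years where each sample averages 1000 years of air.
for start in (9_900, 10_400, 11_000):
    seen = largest_sample(start, 200, 100.0, 1000, 2000, 20_000)
    print(f"excursion starting in year {start}: record shows at most +{seen:.0f} ppm")
```

Depending on where the excursion falls relative to the samples, it shows up reduced to a fifth, reduced further, or not at all.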

The higher resolution of more modern ice cores is completely irrelevant, although they are often juxtaposed, as in the IPCC methane example above, where the old, smoothed measurements shown in panel (e) are implied to be equivalent to the modern ice core measurements shown as dots in panel (a). But the dots in (a) can be determined with much higher resolution, as the snow and ice are not nearly as compacted and annual layers are still visible and analyzable.

The main problem with the conclusion CO2 'highest for 650,000 years', and with charts like the one from Wikipedia, is that they mislead nonscientists into believing that ice core measurements provide proof of CO2 boundedness when they obviously can't. The only way to show that the current spike is not natural is to show either that physical processes could not release the quantities of CO2 seen today, which is unlikely, or that if they did, the elevated CO2 levels would last for thousands of years and so be picked up in the ice core record. Most AGW proponents choose the latter, as seen in the response to post 130 at RealClimate.

To calculate the impulse response from a spike of CO2, one must account for increased diffusion of CO2 into the oceans and increased uptake by plants. The carbon cycle figure (from http://cdiac.esd.ornl.gov/pns/graphics/c_cycle.htm) shows flows at equilibrium, more or less equal, although the numbers don't quite add up, implying that carbon is currently not in equilibrium. The atmosphere is measured to gain 3.8 Gt of new carbon per year, but with the manmade addition of 6.2 Gt and the other flows shown in the figure, there should be 4.6 Gt added to the atmosphere each year. The impulse response from a spike of CO2 similar to the current spike shows the excess CO2 declining to about 40% of the spike in about 50 years and to 20-30% in 500 years (see figure 4 in http://isam.atmos.uiuc.edu/atuljain/publications/WuebblesEtAl.pdf). The impulse response does not explain where such an impulse would come from, but a pulse of CO2 similar to the present increase (if it ended as abruptly as it has appeared) would be invisible in the older ice core measurements.
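To make the quoted numbers concrete, the sketch below uses a hypothetical two-term curve: a long-lived fraction that is held constant (a simplification; in reality it too decays, far more slowly) plus a fraction that decays exponentially, with the two constants chosen so that about 40% of a pulse remains after 50 years and about 25% after 500 years. It is not the model used by Wuebbles et al., only a curve that passes through the figures quoted from it.

```python
import math

# Hypothetical fit, not the Wuebbles et al. model.
LONG_LIVED = 0.25  # fraction treated as permanent on these timescales
# Solve LONG_LIVED + (1 - LONG_LIVED) * exp(-50 / tau) = 0.40 for tau (~31 years).
TAU_YEARS = 50 / math.log((1 - LONG_LIVED) / (0.40 - LONG_LIVED))


def fraction_remaining(years):
    """Fraction of an instantaneous CO2 pulse still in the atmosphere."""
    return LONG_LIVED + (1 - LONG_LIVED) * math.exp(-years / TAU_YEARS)


for t in (0, 10, 50, 100, 500):
    print(f"{t:>4} years after the pulse: {fraction_remaining(t):.0%} remains")
```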

Myth: All "extra" CO2 is human

The text deleted below was wrong. Simply put, the amount of human-created CO2 is about double the observed increase in the atmosphere; essentially, nature absorbs about half of the man-made extra. It is true that this varies by season (in the NH summer nature absorbs more than man produces, but in winter, when the growing season stops, man catches up with and passes nature). It is also true that CO2 rises with warming and that some warming is natural.

Contrary to what is implied in the article How do we know that recent CO2 increases are due to human activities?, there are natural variations in 13C/12C that result from natural climate changes. The article fails to quantify the natural changes (implying they are zero) and thus fails to quantify the manmade changes and validate its title. The study Implications of a 400 year tree ring based 13C/12C chronology concludes that "...shifts in 13C/12C prior to 1850 resulted from a climate-induced perturbation in the Ci/Ca ratio". In other words, temperature and humidity changed enough that changes in vegetation caused changes in the 13C/12C ratio as measured in the ice cores.

As shown in the poster http://www.holivar2006.org/abstracts/pdf/T3-032.pdf, the initial decline in the 13C/12C ratio began in the 1700s, before the "industrial revolution". The supposed start of the industrial revolution in 1850 is not significant when looking at the carbon emission data (ftp://cdiac.ornl.gov/pub/ndp030/global.1751_2006.ems). The amount of anthropogenic carbon reached 0.1% of the natural carbon flows in the 1870s and 1% in the 1950s, making it unlikely that the isotope ratio changes were anthropogenic until recently. Another possible factor not included in this data is land use, but that is historically small and hard to distinguish from natural "land use" changes in historic data. In short, some part of the isotope ratio change is natural and some is anthropogenic, which further suggests that part of the observed CO2 rise is of natural biotic (low ratio) origin.
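The isotope argument rests on mass balance: adding isotopically light carbon lowers the atmosphere's 13C/12C ratio (expressed as delta-13C) whatever its origin. The sketch below uses simple mass-weighted mixing of delta values, ignoring exchange with the ocean and biosphere, and round numbers that are assumptions for illustration. Because C3 plant carbon and fossil fuel carbon both sit at roughly -25 to -28 per mil, a 13C/12C decline by itself cannot say which one supplied the carbon; that is why the 14C decline mentioned earlier is a separate line of evidence.

```python
def mix_delta13c(reservoir_gtc, reservoir_delta, added_gtc, added_delta):
    """Approximate delta-13C (per mil) after adding carbon to a reservoir,
    by mass-weighted mixing of delta values (no exchange with other reservoirs)."""
    total = reservoir_gtc + added_gtc
    return (reservoir_gtc * reservoir_delta + added_gtc * added_delta) / total


# Round illustrative numbers (assumptions, not data from the sources above).
atmosphere_gtc = 600.0
atmosphere_delta = -6.4      # per mil, roughly preindustrial air
light_carbon_delta = -26.0   # per mil, roughly both C3 plant and fossil carbon

# The same shift results whether the 10 GtC came from fossil fuel or vegetation.
shift = mix_delta13c(atmosphere_gtc, atmosphere_delta, 10.0, light_carbon_delta) - atmosphere_delta
print(f"delta-13C shift from adding 10 GtC of light carbon: {shift:.2f} per mil")
```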

Myth: Only 0.28% of the "greenhouse effect" is human

As pointed out in the article Water Vapor Rules the Greenhouse System, water vapor dominates the greenhouse effect. True, but irrelevant for consideration of human effects. The science is straightforward; see Water vapour: feedback or forcing?. Removing CO2 from the atmosphere would leave about 90% of the greenhouse effect. Removing water vapor would leave about 65%. A similar table is in the references to the Water Vapor Rules... article: http://www.eia.doe.gov/cneaf/alternate/page/environment/appd_d.html. The quote that 95% of the earth's greenhouse effect comes from water vapor is incorrect; it applies only to the troposphere and does not distinguish between forcing and feedback. It is the warming from the rest of the greenhouse gases that causes the water to evaporate and produce its own warming. A simple example should suffice: if the earth's atmosphere contained no CO2, all the water vapor would quickly freeze and stay frozen regardless of any plausible solar forcing changes.

The bottom line is that the 10% or more of the greenhouse effect from CO2 is being divided artificially into human and nonhuman components. Since the models are tuned and accurate to today's CO2 concentrations, we would need to estimate the percentage of CO2 of human origin. As seen in the section above, it's not 100% of the increase from preindustrial times. But nor is it zero. Splitting the difference would mean 1/2 of the 30% (increase) of the 10% of the total greenhouse effect, or 1.5%, not 0.28%. But the real bottom line is that the number isn't meaningful.
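The 1.5% figure is a product of three fractions. Writing the chain out shows how directly it scales with the assumed human fraction of the increase, which the section above argues lies somewhere between the 0% and 100% cases. The numbers are the text's own round values; the function name is illustrative.

```python
co2_share_of_effect = 0.10   # "10% or more" of the greenhouse effect is from CO2
increase_share = 0.30        # the ~30% increase over preindustrial CO2


def human_share(human_fraction_of_increase):
    """Share of the total greenhouse effect attributed to human CO2."""
    return human_fraction_of_increase * increase_share * co2_share_of_effect


for fraction in (0.0, 0.5, 1.0):  # none, "split the difference", all
    print(f"human fraction of increase {fraction:.0%}: "
          f"{human_share(fraction):.2%} of the greenhouse effect")
```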

Oversimplified models

Both sides in the global warming debate have a tendency to oversimplify models. The most common flaw is to substitute assumptions or real-world measurements for a model parameter, then use the model to make predictions without modeling the parameter. For example, earth's albedo can be measured using satellites and inserted into a simple energy balance model. But those albedo measurements cannot be projected in a model without detailed simulation of weather. Some specific modeling problems, such as under- and overestimation of albedo, are mentioned here: http://climatesci.colorado.edu/2006/05/11/uncertainty-identified-in-gcms-with-respect-to-albedos/. A small error in modeled parameters like albedo can lead to large errors in model results. Using the Stefan-Boltzmann equation from above (itself a gross oversimplification):

T = 4th root of (((1367 / 4) * 0.7) / (5.67 x 10^-8)) = 254.862 K

T = 4th root of (((1367 / 4) * 0.707) / (5.67 x 10^-8)) = 255.497 K

so a 1% increase in absorbed sunlight (albedo falling from 0.300 to 0.293) can account for more than a 0.6 degree C temperature increase. The observed albedo can fluctuate more than that in a year, mainly because of volcanic eruptions, but any projection of albedo by itself is an oversimplification, since the albedo changes come mostly from cloud changes, which change the heat trapped by the greenhouse effect, convection, and heat released from the water cycle.
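The two temperatures above, and the albedo change they correspond to, can be reproduced with the same no-greenhouse energy balance used earlier. Going from 0.700 to 0.707 of sunlight absorbed is an albedo change from 0.300 to 0.293, i.e. 0.7 percentage points. This is a sketch of the text's own simplified equation, not a climate model; the function name is illustrative.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant (W m^-2 K^-4), value used in the text


def blackbody_temperature(solar_constant, absorbed_fraction):
    """No-greenhouse equilibrium temperature (K) for a sphere absorbing the
    given fraction of sunlight (absorbed_fraction = 1 - albedo)."""
    return (solar_constant / 4.0 * absorbed_fraction / SIGMA) ** 0.25


t_albedo_0300 = blackbody_temperature(1367.0, 0.700)
t_albedo_0293 = blackbody_temperature(1367.0, 0.707)
print(f"{t_albedo_0300:.3f} K -> {t_albedo_0293:.3f} K, "
      f"warming {t_albedo_0293 - t_albedo_0300:.2f} K")
```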

There are numerous examples of model failure, typically explained away as weather (which is not predictable) versus climate (which is). One recent example is the lack of prediction of the Recent Cooling of the Upper Ocean. But climate modelers also attempt to match model results with reality by postulating ocean heat storage (e.g. http://naturalscience.com/ns/articles/01-16/ns_jeh3.html: "Sydney Levitus (Reference 4) has analyzed ocean temperature changes of the past 50 years, finding that the world ocean heat content increased about 10 watt-years, consistent with the time integral of the planetary energy imbalance. Levitus also found that the rate of ocean heat storage in recent years is consistent with our estimate that the energy balance of the Earth is now out by 0.5 to 1 W/m2."). But without warming oceans there is no longer an explanation of where the extra energy is going to maintain a continuous imbalance of energy (except for certain volcanic events), as shown in their figure 5. Clearly their model is missing, or inaccurately modeling, large amounts of energy transfer.

Oversimplified Solutions

Many scientists who maintain that the current models are projecting undesirable environmental effects (e.g. rising sea levels) also insist that political action be taken immediately to reduce CO2 output. While this may be a worthy goal for other environmental and geopolitical reasons, those benefits should also be compared against the socioeconomic costs of CO2 reduction. When benefits are postulated with no consideration of costs, that is another example of an oversimplified model. Considering the costs of potential warming consequences like rising sea levels would lead to consideration of warming mitigation strategies rather than CO2 reduction for its own sake. For example, mechanisms to increase albedo could be devised that would be a lot less expensive than CO2 reduction for a given amount of cooling, and that cooling would take place much sooner. Smaller-scale alternatives to CO2 reduction can also be considered that allow a range of localized political bodies to think globally but act locally, where the economic tradeoffs can be more easily considered.

CO2 alternatives are generally not factored into climate models, for example the warmer and drier conditions downwind from large wind farms. Some advocates of hybrid cars do not consider the energy costs to manufacture and ship the batteries, nor do they account for the batteries' limited lifespan. The results of the study Hummer Over Prius are heavily biased by a questionable three times longer lifespan for the Hummer, but the Hummer doesn't have hybrid parts and complexity. Other conclusions are less intuitive; for example, a bicycle with a motor might be less energy intensive than one run by food: http://www.ebikes.ca/sustainability/Ebike_Energy.pdf. Combined with the uncertainties and known inaccuracies of the climate models, substituting some limited aspect of CO2 production (e.g. electricity use) for warming, or using "carbon credits" to reduce global warming impact, is absurdly oversimplified and in many cases false and counterproductive.
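The lifespan issue above can be seen in a simple lifecycle formula: energy per km is the manufacturing energy spread over the lifetime distance, plus the operating energy per km. The two vehicles below are hypothetical, and their numbers are not figures from the Hummer Over Prius study; they are chosen only to show that an assumed threefold lifespan can reverse a ranking on its own.

```python
def lifecycle_mj_per_km(manufacture_mj, operating_mj_per_km, lifetime_km):
    """Energy per km over a vehicle's life: build energy spread over the
    lifetime distance, plus the energy used per km of driving."""
    return manufacture_mj / lifetime_km + operating_mj_per_km


# Hypothetical vehicles; NOT figures from the Hummer Over Prius study.
heavy = dict(manufacture_mj=200_000, operating_mj_per_km=3.0)
hybrid = dict(manufacture_mj=300_000, operating_mj_per_km=1.5)

same_life = 100_000  # km, assumed equal lifespan
heavy_same = lifecycle_mj_per_km(**heavy, lifetime_km=same_life)
hybrid_same = lifecycle_mj_per_km(**hybrid, lifetime_km=same_life)
heavy_triple = lifecycle_mj_per_km(**heavy, lifetime_km=3 * same_life)

print(f"equal lifespans:       heavy {heavy_same:.2f}, hybrid {hybrid_same:.2f} MJ/km")
print(f"heavy lasts 3x longer: heavy {heavy_triple:.2f}, hybrid {hybrid_same:.2f} MJ/km")
```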

Ultimately it will become routine for the general public to have their own models telling them the consequences of all of their relevant actions, particularly their use of energy or of items with high energy inputs. But this will require full integration of environmental inputs and outputs, along with sufficiently detailed modeling to account for small changes. An economic incentive would have to be added to the model, e.g. a tax on activities with warming consequences, to encourage changes in behavior if those are necessary (although politicians, not scientists, would end up making that decision, so it is vital to make the models transparent and publicly available). There is no doubt the current models are not able to model warming consequences accurately or even roughly for individuals and companies. But there is also no doubt that warming models will be accurate in 20 years or so with better science and greatly increased computer power.
